Gunicorn vs Python GIL

What the Python GIL is, how it works, and how it affects Gunicorn.

What Gunicorn worker type should I choose for production?

This is a recurring question I get asked and something I tried to explain in a previous article.

After discussing the topic with other people, I realized the article was a bit incomplete without explaining the Python internals that directly influence how Gunicorn serves requests.

As we saw in that article, Python (more precisely CPython, the reference implementation) has a global interpreter lock (GIL) that allows only one thread per process to run (i.e. interpret bytecode) at a time.

In my opinion, understanding how Python handles concurrency is essential if you want to optimize your Python services.

Python and Gunicorn offer different ways to handle concurrency, and since there’s no silver bullet that covers every use case, it’s good to know the options and the tradeoffs and strengths of each one.

Gunicorn worker types

Gunicorn exposes those options through the concept of “worker types”.

Each type works best for a specific set of use cases.

- sync: the master forks N worker processes that serve requests in parallel, one request per process at a time

- gthread: spawns N threads per worker process to serve requests concurrently

- eventlet/gevent: spawn green threads (greenlets) to serve requests concurrently
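In practice, the worker type is chosen with Gunicorn's `worker_class` setting, alongside `workers` (the number of processes) and, for gthread, `threads`. Here is a minimal `gunicorn.conf.py` sketch; the numbers are placeholders, not recommendations:

```python
# gunicorn.conf.py: Gunicorn reads this file as plain Python.
# The values below are illustrative placeholders; tune them with your own benchmarks.

workers = 4                # number of worker processes (applies to every worker type)
worker_class = "gthread"   # one of "sync", "gthread", "eventlet", "gevent"
threads = 8                # threads per worker process (gthread); with "sync", threads > 1 makes Gunicorn use gthread
worker_connections = 1000  # max simultaneous clients per worker (relevant to eventlet/gevent)
```

You would then start the server with something like `gunicorn -c gunicorn.conf.py myproject.wsgi:application`, where `myproject.wsgi:application` is a hypothetical placeholder for your own WSGI app.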

Gunicorn sync worker

This is the simplest worker type: the only concurrency option is forking N processes that serve requests in parallel.

Sync workers work well enough, but they incur a lot of overhead (memory and CPU context switching, for example) and won’t scale well if most of your request time is spent waiting on I/O, since each process sits idle while its single request waits.

Gunicorn gthread worker

The gthread worker improves on that by letting you run N threads in each process. This improves I/O performance, since a thread that blocks on I/O releases the GIL and another thread can run in the meantime. Of the four worker types (sync, gthread, eventlet, and gevent), this is the only one affected by the GIL.
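Here is a small stdlib-only sketch of why threads help with I/O. It assumes `time.sleep()` is a fair stand-in for a blocking network call: both release the GIL while waiting, so the waits of different threads overlap. `fake_io_request` and `serve` are made-up names for illustration.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def fake_io_request(request_id):
    # time.sleep() releases the GIL, like a blocking socket read would,
    # so other threads can run while this one waits.
    time.sleep(0.1)
    return request_id

def serve(num_requests, num_threads):
    """Serve num_requests simulated I/O-bound requests with num_threads threads; return elapsed seconds."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=num_threads) as pool:
        list(pool.map(fake_io_request, range(num_requests)))
    return time.perf_counter() - start

if __name__ == "__main__":
    print(f"1 thread:  {serve(8, 1):.2f}s")  # requests wait one after another
    print(f"8 threads: {serve(8, 8):.2f}s")  # the waits overlap
```

With one thread, eight 100 ms requests take at least 800 ms; with eight threads they finish in roughly the time of a single request.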

Gunicorn eventlet and gevent workers

The eventlet/gevent workers try to improve even more on the gthread model by running lightweight user threads (aka green threads, greenlets, etc.). This lets you run thousands of greenlets at a fraction of the cost of system threads.

The other difference is that they follow a cooperative scheduling model instead of a preemptive one: each greenlet runs uninterrupted until it blocks (typically on I/O).

We will start by analyzing how the gthread worker behaves when serving requests and how it is affected by the GIL.

How Python schedules threads using a global lock (GIL)

Unlike the sync worker, where each request is served directly by a process, the gthread worker runs N threads in each process, so it can scale better without the overhead of spawning more processes.

Since multiple threads run inside the same process, the GIL prevents them from executing Python bytecode in parallel.

The (in)famous GIL is not a process or a special thread. It’s just a boolean variable, protected by a mutex, that makes sure only one thread is running inside each process at a time.

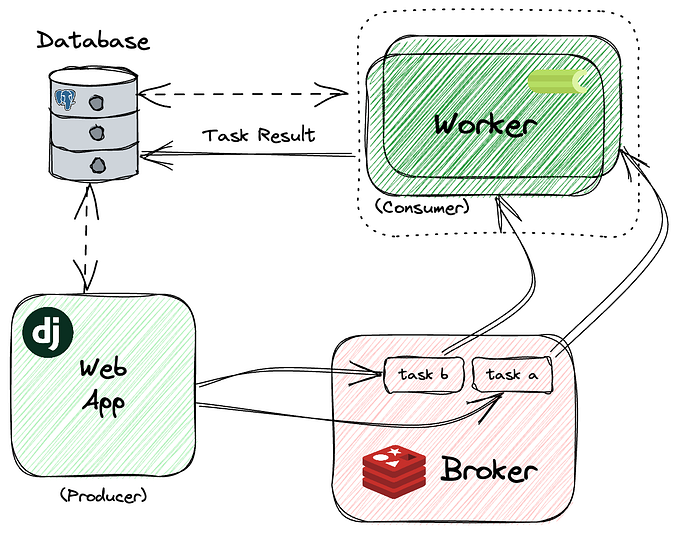

The way it works can be seen in the above diagram.

In this example, we can see that we have 2 system threads running concurrently to serve 1 request each. The flow goes like this:

- Thread A holds the GIL and starts serving a request

- After a while, Thread B tries to serve a request but can’t acquire the GIL

- B sets a `timeout` to force the GIL to be released if A doesn’t release it before the timeout is reached

- A doesn’t release the GIL before the timeout is reached

- B sets the `gil_drop_request` flag to force A to release the GIL immediately

- A releases the GIL and waits until another thread grabs it, to avoid a scenario where A would continuously release and re-grab the GIL without other threads ever being able to take it

- B starts running

- B releases the GIL while blocking on I/O

- A starts running again

- B tries to run again (its I/O has completed) but has to wait for the GIL

- A finishes before the timeout is reached

- B finishes running
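To make the flow above more concrete, here is a toy GIL written in pure Python: a boolean guarded by a mutex, waiters that block on a condition variable with a timeout (the switch interval), a drop-request flag, and a forced switch where the old holder waits until someone else has taken the lock. It is a simplified sketch of the mechanism described above, not CPython's actual implementation (which is written in C and checks the drop request between bytecodes); `ToyGIL`, `drop_if_requested`, and `run_scenario` are hypothetical names.

```python
import threading
import time

class ToyGIL:
    """Toy model of CPython's GIL (hypothetical names, simplified logic)."""

    def __init__(self, interval=0.005):
        self.interval = interval   # like sys.getswitchinterval(): 5 ms by default
        self.locked = False        # the "boolean variable"
        self.drop_request = False  # like CPython's gil_drop_request flag
        self.switch_number = 0     # incremented every time the GIL changes hands
        self.mutex = threading.Lock()
        self.cond = threading.Condition(self.mutex)         # waiters block here
        self.switch_cond = threading.Condition(self.mutex)  # a forced dropper blocks here

    def acquire(self):
        with self.mutex:
            while self.locked:
                seen = self.switch_number
                # Wait up to `interval` for the holder to release the GIL on its own.
                notified = self.cond.wait(self.interval)
                if not notified and self.locked and self.switch_number == seen:
                    # Timed out and nobody else took over: ask the holder to drop it.
                    self.drop_request = True
            self.locked = True
            self.drop_request = False
            self.switch_number += 1
            self.switch_cond.notify_all()

    def release(self):
        with self.mutex:
            self.locked = False
            self.cond.notify()

    def drop_if_requested(self):
        """Called periodically by the running thread (CPython checks between bytecodes)."""
        if not self.drop_request:  # cheap unlocked check
            return
        with self.mutex:
            if not self.drop_request:
                return
            self.locked = False
            self.cond.notify()
            seen = self.switch_number
            # Forced switch: wait until another thread has actually taken the GIL,
            # so we don't immediately grab it back.
            while self.switch_number == seen:
                self.switch_cond.wait()
        self.acquire()

def run_scenario():
    gil = ToyGIL(interval=0.005)
    events = []
    a_started = threading.Event()

    def thread_a():  # long CPU-bound request
        gil.acquire()
        events.append("A acquired")
        a_started.set()
        deadline = time.monotonic() + 0.3
        while time.monotonic() < deadline:
            gil.drop_if_requested()
        events.append("A finished")
        gil.release()

    def thread_b():  # short request that arrives while A holds the GIL
        a_started.wait()
        gil.acquire()  # times out after 5 ms, sets drop_request, then gets the GIL
        events.append("B acquired")
        gil.release()

    threads = [threading.Thread(target=thread_a), threading.Thread(target=thread_b)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return events

print(run_scenario())  # expected: ['A acquired', 'B acquired', 'A finished']
```

B gets to run in the middle of A's long request only because the timeout expired and the drop request forced A to let go, which is the same preemption the gthread worker relies on.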

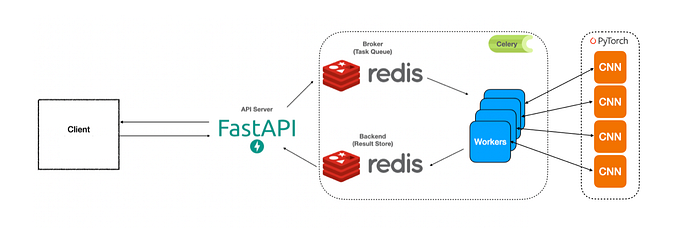

The same scenario, but using gevent

The other way to increase concurrency without adding processes is to use greenlets. The eventlet/gevent workers spawn “user threads” instead of “system threads” to increase concurrency.

Although this means greenlets don’t compete for the GIL, it also means you still can’t increase parallelism: all the greenlets in a process run on a single system thread, so the OS can’t schedule them on multiple CPU cores at once.

- Greenlet A runs until it blocks on I/O or finishes executing

- Greenlet B will wait until Greenlet A releases the event loop

- A finishes

- B starts

- B releases the event loop to wait on I/O

- B finishes
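gevent isn't in the standard library, so the sketch below uses `asyncio` as a stand-in: both are cooperative, single-threaded schedulers, so the ordering is the same even though the APIs differ. A CPU-bound coroutine that never awaits keeps the event loop to itself until it finishes, and only then does the second request start. The function names are hypothetical.

```python
import asyncio

async def cpu_bound_request(trace):
    trace.append("A starts")
    sum(i * i for i in range(200_000))  # CPU-bound work with no await: nothing can interrupt it
    trace.append("A finishes")

async def io_bound_request(trace):
    trace.append("B starts")
    await asyncio.sleep(0.01)  # stand-in for a network call; yields control to the event loop
    trace.append("B finishes")

async def main():
    trace = []
    # Both "requests" are scheduled at the same time, like two concurrent connections.
    await asyncio.gather(cpu_bound_request(trace), io_bound_request(trace))
    return trace

print(asyncio.run(main()))  # ['A starts', 'A finishes', 'B starts', 'B finishes']
```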

In this scenario, a greenlet-based worker is clearly not ideal. The second request waits until the first one finishes, only to sit idle again waiting on I/O, whereas with gthread it could have started its I/O while A was still running.

Where greenlets usually shine is when you have A LOT of concurrent requests that mostly wait on I/O.

In those scenarios, the cooperative model pays off: you don’t waste time on context switching, and you avoid the overhead of running many system threads.

We’ll witness that with our benchmarks at the end of this article.

This raises the following questions:

- Does changing the thread context switch timeout affect the service latency and throughput?

- How to choose between gevent/eventlet and gthread when you have a mix of I/O and CPU work?

- How to choose the number of threads with the gthread worker?

- Should I just use the sync worker and increase the number of forked processes to avoid the GIL?

To answer those questions, you will need to have monitoring in place to collect the necessary metrics and then run tailored benchmarks for those same metrics.

It’s useless to run synthetic benchmarks that have zero correlation to your real-world usage patterns.

The following pictures show the latency and throughput metrics for different scenarios in order to give you a sense of how this all works together.

Benchmarking the GIL switch interval

Here we can see how changing the GIL thread switch interval/timeout affects request latency (the default is 5ms).

As expected, IO latency gets better with lower switch intervals. This happens because the CPU-bound threads are forced to release the GIL more often and allow other threads to do their work.
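The effect can be reproduced in miniature with a stdlib-only sketch: one thread spins in a pure-Python loop (holding the GIL), while the main thread repeatedly "does I/O" with a 1 ms `time.sleep()` and measures how much longer than 1 ms each wait actually took. The extra time is mostly spent waiting to get the GIL back, so it should grow with the switch interval. This is a synthetic micro-benchmark under those assumptions, not a substitute for measuring your own service, and absolute numbers will vary by machine and Python version; `cpu_hog` and `io_wakeup_latency` are hypothetical names.

```python
import sys
import threading
import time

def cpu_hog(stop):
    # Pure-Python loop: keeps the GIL until the interpreter forces a switch.
    x = 0
    while not stop.is_set():
        x += 1

def io_wakeup_latency(switch_interval, samples=20, io_time=0.001):
    """Average extra delay (seconds) an I/O-bound thread sees after its simulated I/O completes."""
    previous = sys.getswitchinterval()
    sys.setswitchinterval(switch_interval)
    stop = threading.Event()
    hog = threading.Thread(target=cpu_hog, args=(stop,))
    hog.start()
    try:
        overshoot = 0.0
        for _ in range(samples):
            start = time.perf_counter()
            time.sleep(io_time)  # releases the GIL, like a blocking socket read
            overshoot += time.perf_counter() - start - io_time
        return overshoot / samples
    finally:
        stop.set()
        hog.join()
        sys.setswitchinterval(previous)  # restore the original interval

if __name__ == "__main__":
    for interval in (0.0005, 0.005, 0.02):
        extra_ms = io_wakeup_latency(interval) * 1000
        print(f"switch interval {interval * 1000:.1f} ms -> avg extra wait {extra_ms:.2f} ms")
```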

But it’s not a silver bullet. Decreasing the switching interval will make the CPU-bound threads take longer to finish.

We can also see total latency increase with lower timeouts, due to the overhead of more frequent thread switching.

If you want to try it yourself, you can change the switching interval using this code:
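A minimal sketch using the standard library's `sys.setswitchinterval()` (the value is in seconds; `sys.getswitchinterval()` returns the current one, 0.005 by default). `post_fork(server, worker)` is Gunicorn's server hook that runs in each worker right after it is forked, so defining it in `gunicorn.conf.py` is one way to apply the setting to every worker. The 1 ms value is only an example.

```python
import sys

SWITCH_INTERVAL = 0.001  # seconds; illustrative value (CPython's default is 0.005)

def post_fork(server, worker):
    # Gunicorn calls this hook in each worker process right after forking it,
    # so every worker ends up with the same switch interval.
    sys.setswitchinterval(SWITCH_INTERVAL)

if __name__ == "__main__":
    # Outside Gunicorn, the same call works in any Python process.
    print("before:", sys.getswitchinterval())
    sys.setswitchinterval(SWITCH_INTERVAL)
    print("after:", sys.getswitchinterval())
```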

Benchmarking gthread vs gevent latency with CPU-bound requests

In general, the benchmark reflects the intuition from our previous analysis of how GIL-bound threads and greenlets work.

- gthread has better average latency for IO-bound requests, thanks to the switch interval forcing long-running threads to release the GIL

- CPU-bound requests have better latency with gevent than with gthread, since they are not interrupted to serve other requests

Benchmarking gthread vs gevent throughput with CPU-bound requests

Here the results also reflect our previous intuition of gevent having better throughput than gthread.

These benchmarks are highly dependent on the type of work done and don’t necessarily translate directly to your use case.

The main objective of these benchmarks is to give you some guidelines on what to test and measure in order to maximize each CPU core that will be serving requests.

All Gunicorn worker types let you specify the number of worker processes; what changes between them is how each process handles concurrent connections. So make sure you use the same number of worker processes in every test to keep the comparison fair.

Now let’s try to answer the previous questions using the data gathered from our benchmarks.

Does changing the thread context switch timeout affect the service latency and throughput?

It does. However, it’s not a game-changer for the vast majority of workloads.

How to choose between gevent/eventlet and gthread when you have a mix of I/O and CPU work?

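As we saw, gthread tends to handle concurrency better when there is more CPU-bound work in the mix, since the switch interval forces CPU-bound threads to release the GIL instead of making I/O-bound requests wait for them to finish.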

How to choose the number of threads with the gthread worker?

It depends. But as long as your benchmark is able to simulate a production-like behavior, you’ll clearly see a peak performance before it starts to degrade due to too many threads.
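One way to find that peak is to sweep the thread count against a workload that mimics your traffic and look for the point where throughput stops improving. The sketch below is hypothetical: `simulated_request()` mixes a sleep (standing in for I/O) with a short pure-Python loop (standing in for CPU work), and you would replace it with calls that resemble your real endpoints, or better, drive a real Gunicorn instance with a load-testing tool.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def simulated_request(io_seconds=0.005, cpu_iterations=20_000):
    # Hypothetical request: some I/O wait (releases the GIL) plus some CPU work (holds it).
    time.sleep(io_seconds)
    total = 0
    for i in range(cpu_iterations):
        total += i
    return total

def throughput(num_threads, num_requests=100):
    """Requests per second when num_requests simulated requests share num_threads threads."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=num_threads) as pool:
        list(pool.map(lambda _: simulated_request(), range(num_requests)))
    return num_requests / (time.perf_counter() - start)

if __name__ == "__main__":
    for n in (1, 2, 4, 8, 16, 32, 64):
        print(f"{n:>3} threads: {throughput(n):8.1f} req/s")
```

With a toy workload like this you may only see throughput plateau once the CPU part saturates the GIL; whether and where it degrades depends on your real request mix.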

Should I just use the sync worker and increase the number of forked processes to avoid the GIL?

Unless you have almost zero I/O, scaling with only processes is not optimal.

Conclusions

- Coroutines/Greenlets can be more CPU efficient since they avoid interruptions and context switching between threads.

- Coroutines trade latency in favor of throughput.

- If you have a mix of IO and CPU-bound endpoints, coroutines can result in less predictable latencies, since CPU-bound endpoints won’t be interrupted to serve other incoming requests.

- The GIL is not a problem if you take the time to properly configure Gunicorn for your workload.